Neural Thompson Sampling (NeuralTS) was introduced by Zhang et al. (2021) (Reference 1) as a way to introduce Thompson sampling (TS) to neural network prediction models. In this post, we introduce Thompson sampling, outline the problem setting for NeuralTS, describe the NeuralTS algorithm and give a bit more detail on how we can derive it.

Introduction to Thompson sampling (TS)

Thompson sampling is an algorithm for online decision problems. Imagine that we have to make a sequence of sequences across time periods. In each time period

, we make an action

. The system receives the action and generates a response according to the conditional probability distribution

, and we get a reward

. Our goal is to maximize our expected reward across the

time periods. The value of

is assumed to be unknown to us.

While we don’t know the value of , we can use information from previous rounds to get better and better estimates of

. In particular, we can use the Bayesian paradigm to do so. We start with an initial prior distribution for

:

. After each round, we get an updated posterior distribution for

, which we can use to make decisions for future rounds.

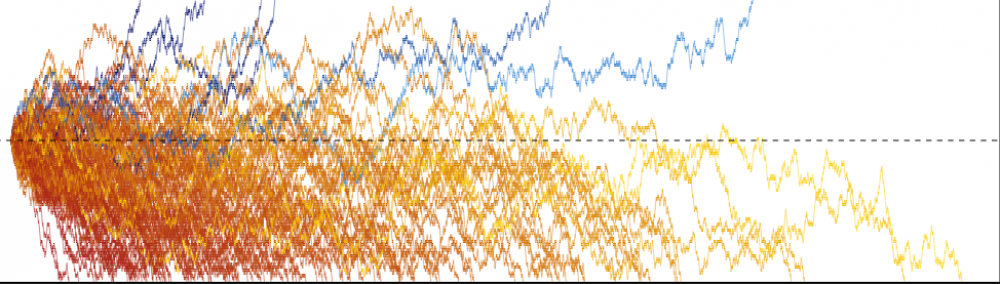

There are many ways to use our estimate of to choose an action in each round. In the greedy approach, we compute a “most likely” value of

(e.g. the mean of the posterior distribution of

), then take the action that maximizes the reward under this value of

. In Thompson sampling, we take a sample of

from the posterior distribution, then take the action that maximizes the reward under the sampled

. The difference between the two approaches is shown in the figure below taken from Russo et al. (2018) (Reference 2).

Contextual bandits

In the setting above (sometimes called the “context-free bandit setting”), in each round we choose an action based solely on our understanding of . In the contextual bandit setting, in each round

, we are additionally given a feature vector

representing specific information for round

. Our job is to choose an action

, and the system will generate a reward or outcome according to

. (Note the different notation in this section, it’s to be consistent with the NeuralTS paper.)

An example of the contextual bandit setting is selecting the top story to headline a news website for each user. Each request for a top story corresponds to a round, each story that could be shown represents an action, and the context vector could be features about the user (e.g. age, interests, past browsing history).

Instead of limiting the context vector to just user features, the context vector can also include arm features or features from the (user, arm) interaction.

NeuralTS

NeuralTS operates in the contextual bandit setting. Assume that we have different arms/actions and we have to make decisions across

rounds. In each round

, we get

contextual vectors

, one for each arm. NeuralTS assumes that the reward for a given context vector

is the output of a neural network

of depth

:

Let denote the vector of parameters for the neural network. If we apply Thompson sampling directly to this setting, in each round we would sample the network parameters

from its posterior distribution, then choose the action that maximizes the reward:

. While workable, this becomes computationally difficult when the network is large.

Instead of sampling from the posterior distribution of , NeuralTS samples from the posterior distribution of the scalar rewards

. Here is the algorithm as written in the paper:

For completeness, , and here is equation (2.3) referenced in the Algorithm:

While it might look intimidating, NeuralTS is basically using the Laplace method to approximate the output of the neural network as a Gaussian distribution with suitably chosen parameters. It maintains a Gaussian distribution for each arm’s reward. To select an arm, we sample the reward for each arm from the reward’s posterior distribution, then pull the greedy arm. Details on the Laplace method can be found in this previous post. Here are some additional notes:

- Line 1:

represents the estimated covariance matrix of

at time 0 (i.e. the prior distribution).

- Line 2: This is the initialization for the neural network parameters.

- Line 5: This looks like the formula for posterior variance of the target variable in the previous post but with some minor differences (it removes the additive

term but has a multiplicative

term).

- Line 6: NeuralTS adds an additional hyperparameter,

, used to control how much exploration the algorithm will do. Larger values of

correspond to more exploration. The original Laplace method has

.

- Line 9: In the original Laplace method, we set

to be the minimizer of a least-squares regression problem: equation (2.3) above with

. In contrast, NeuralTS takes

to be the minimizer of an

-regularized least-squares problem.

- Line 10: This is the posterior variance update for the neural network parameters.

References:

- Zhang, W. et al. (2021). “Neural Thompson Sampling.“

- Russo et al. (2018). A Tutorial on Thompson Sampling.