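In this previous post, I introduced the concept of an equicorrelation matrix, i.e. an $n \times n$ matrix where the entries on the diagonal are all equal to $1$ and all off-diagonal entries are equal to some parameter $\rho$ which lies in $[-1, 1]$:

$$\Sigma = \begin{pmatrix} 1 & \rho & \cdots & \rho \\ \rho & 1 & \cdots & \rho \\ \vdots & \vdots & \ddots & \vdots \\ \rho & \rho & \cdots & 1 \end{pmatrix}.$$

There, I noted that for the matrix to be positive definite, we need $-\frac{1}{n-1} < \rho < 1$. (Alternatively, $-\frac{1}{n-1} \le \rho \le 1$ is equivalent to $\Sigma$ being positive semidefinite.)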

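It might seem a little strange that $\rho$ has a non-trivial lower bound but no non-trivial upper bound. A quick direct proof of the lower bound follows from the fact that the determinant of a positive semidefinite matrix must be non-negative. We can explicitly compute the determinant of the $n \times n$ equicorrelation matrix $\Sigma$, so

$$0 \le \det \Sigma = (1 - \rho)^{n-1} \left[ 1 + (n-1)\rho \right].$$

Since $(1 - \rho)^{n-1} > 0$ whenever $\rho < 1$, this forces $1 + (n-1)\rho \ge 0$, i.e. $\rho \ge -\frac{1}{n-1}$.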
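As a quick sanity check, here is a minimal NumPy sketch that compares the numerical determinant of an $n \times n$ equicorrelation matrix with the closed form $(1-\rho)^{n-1}[1 + (n-1)\rho]$, and looks at the smallest eigenvalue on either side of $-\frac{1}{n-1}$. The helper names `equicorrelation` and `det_closed_form` and the choice $n = 5$ are mine, purely for illustration.

```python
import numpy as np

def equicorrelation(n, rho):
    """n x n matrix with 1 on the diagonal and rho everywhere else."""
    return (1 - rho) * np.eye(n) + rho * np.ones((n, n))

def det_closed_form(n, rho):
    """Closed-form determinant of the n x n equicorrelation matrix."""
    return (1 - rho) ** (n - 1) * (1 + (n - 1) * rho)

n = 5  # illustrative size; the lower bound is then -1/(n-1) = -0.25
for rho in (-0.5, -0.25, 0.0, 0.9):
    sigma = equicorrelation(n, rho)
    # eigvalsh is for symmetric matrices and returns real eigenvalues
    min_eig = np.linalg.eigvalsh(sigma).min()
    print(f"rho={rho:+.2f}  det={np.linalg.det(sigma):+.6f}  "
          f"closed form={det_closed_form(n, rho):+.6f}  min eig={min_eig:+.6f}")
```

For values of $\rho$ below $-\frac{1}{n-1}$ the smallest eigenvalue should come out negative, so the matrix is not positive semidefinite there.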

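Here’s another proof of the lower bound that might be slightly more illuminating. Recall that $\Sigma$ being (symmetric) positive semidefinite is equivalent to the existence of random variables $X_1, \dots, X_n$ such that the random vector $(X_1, \dots, X_n)$ has covariance matrix $\Sigma$. Hence,

$$0 \le \mathrm{Var}(X_1 + \dots + X_n) = \sum_{i=1}^n \mathrm{Var}(X_i) + \sum_{i \neq j} \mathrm{Cov}(X_i, X_j) = n + n(n-1)\rho,$$

which rearranges to $\rho \ge -\frac{1}{n-1}$.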
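The variance argument also hints at when the bound is attained: the sum $X_1 + \dots + X_n$ must have zero variance. One standard way to get this (my own illustration, not something from the earlier post) is to take i.i.d. variables $Z_1, \dots, Z_n$ and subtract their average, $X_i = Z_i - \bar{Z}$; each pair then has correlation exactly $-\frac{1}{n-1}$. The sketch below checks this by simulation, with the sample size, seed and $n = 4$ chosen arbitrarily.

```python
import numpy as np

rng = np.random.default_rng(0)   # fixed seed, for reproducibility only
n, num_samples = 4, 200_000      # illustrative choices

# Each row of z is one draw of (Z_1, ..., Z_n), i.i.d. standard normal.
z = rng.standard_normal((num_samples, n))

# Subtract the row mean, so that X_1 + ... + X_n = 0 in every draw.
x = z - z.mean(axis=1, keepdims=True)

corr = np.corrcoef(x, rowvar=False)        # n x n sample correlation matrix
off_diag = corr[~np.eye(n, dtype=bool)]    # all pairwise correlations

print("target  :", -1 / (n - 1))
print("observed: min", off_diag.min(), "max", off_diag.max())
```

The observed correlations are sample estimates, so they should only match the target up to simulation noise.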

Now for some intuition. When $n = 2$, the lower bound is the trivial $\rho \ge -1$, which can be achieved when $X_2 = -X_1$. Now, imagine that $n = 3$: how can we add $X_3$ so that the pairwise correlations are minimized (i.e. are most negative)? There is an inherent tension: the more negatively correlated we make $X_3$ with $X_1$, the more positively correlated $X_3$ becomes with $X_2$. So there is no way to have $\mathrm{Cor}(X_i, X_j) = -1$ for every pair $i \neq j$.
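One way to picture the case of three variables is geometric, under the usual identification of a correlation with the cosine of the angle between two unit vectors (an interpretation I am adding here for illustration). Two vectors can point in exactly opposite directions, but three cannot all be opposite to each other; the most spread-out arrangement puts them 120° apart in a plane, and $\cos 120° = -\frac{1}{2} = -\frac{1}{3-1}$. A minimal sketch:

```python
import numpy as np

# Three unit vectors in the plane, spaced 120 degrees apart.
angles = np.deg2rad([0.0, 120.0, 240.0])
vectors = np.column_stack([np.cos(angles), np.sin(angles)])  # one vector per row

# Gram matrix: entry (i, j) is the cosine of the angle between vectors i and j.
gram = vectors @ vectors.T
print(np.round(gram, 6))
```

The printed matrix should be the $3 \times 3$ equicorrelation matrix with off-diagonal entries equal to $-\frac{1}{2}$, up to floating-point rounding.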

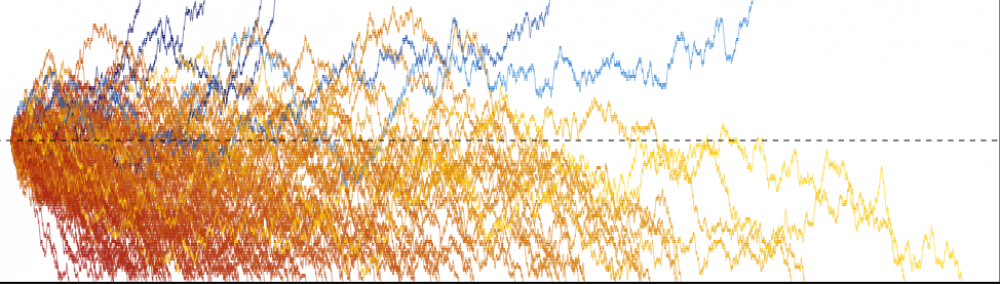

One analogy that could be helpful is to think of each $X_i$ as a magnet that only repels, and we are trying to squeeze all the magnets into one box. As we add more and more magnets, the magnets get closer and closer together (even though they are still trying to repel each other). We don’t get the same problem with magnets that only attract: they all simply stick to each other at a single point, no matter how many magnets we add.