Elastic weight consolidation (EWC) is a technique proposed by Kirkpatrick et al. (2016) (Reference 1) to avoid catastrophic forgetting in neural networks.

Set-up and notation

Imagine that we want to train a neural network to be able to perform well on several different tasks. In real-world settings, it’s not always possible to have all the data from all tasks available at the beginning of model training, so we want our model to be able to learn continually: “that is, [have] the ability to learn consecutive tasks without forgetting how to perform previously trained tasks.”

This turns out to be difficult for neural networks because of a phenomenon known as catastrophic forgetting. Assume that we have already trained a model to be good at one task, task A. Now, when we further train this model to be good at a second task, task B, performance on task A tends to degrade, with the performance drop often happening abruptly (hence “catastrophic”). This happens because “the weights in the network that are important for task A are changed to meet the objectives of task B”.

Let’s rewrite the previous paragraph with some mathematical notation. Assume that we have training data $\mathcal{D}_A$ and $\mathcal{D}_B$ for tasks A and B respectively. Let $\mathcal{D} = \mathcal{D}_A \cup \mathcal{D}_B$. Our neural network is parameterized by $\theta$: model training corresponds to finding an “optimal” value of $\theta$.

Let’s say that training on task A gives us the network parameters $\theta_A^*$. Catastrophic forgetting says that when we further train on task B (to reach new optimal parameters $\theta_B^*$), $\theta_B^*$ is too far away from $\theta_A^*$ and hence has poor performance on task A.

Elastic weight consolidation (EWC)

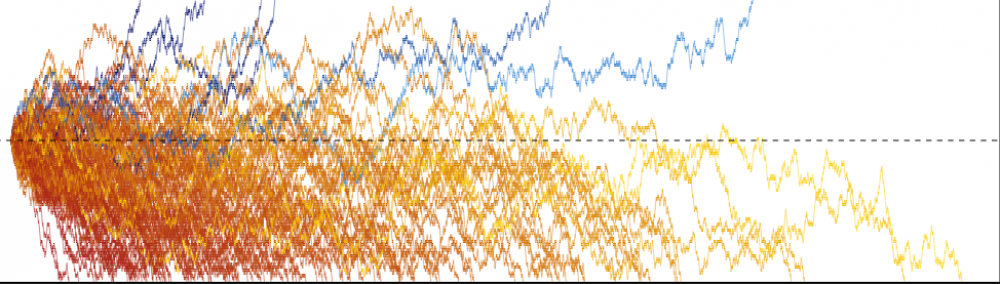

The intuition behind elastic weight consolidation (EWC) is the following: since large neural networks tend to be over-parameterized, it is likely that for any one task, there are many values of that will give similar performance. Hence, when further training on task B, we should constrain the model parameters to be “close” to

in some sense. The figure below (Figure 1 from Reference 1) illustrates this intuition:

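How do we make this intuition concrete? Assume that we are in a Bayesian setting. What we would like to do is maximize the (log) posterior probability of $\theta$ given all the data $\mathcal{D}$. By Bayes’ rule,

$$\log p(\theta \mid \mathcal{D}) = \log p(\mathcal{D} \mid \theta) + \log p(\theta) - \log p(\mathcal{D}).$$

Note that the equation above still holds if we replace all instances of $\mathcal{D}$ with $\mathcal{D}_A$ or $\mathcal{D}_B$. Assuming the data for tasks A and B are independent, let’s do some rewriting of the equation above:

$$\begin{aligned}
\log p(\theta \mid \mathcal{D}) &= \log p(\mathcal{D}_A \mid \theta) + \log p(\mathcal{D}_B \mid \theta) + \log p(\theta) - \log p(\mathcal{D}_A) - \log p(\mathcal{D}_B) \\
&= \log p(\mathcal{D}_B \mid \theta) + \left[ \log p(\mathcal{D}_A \mid \theta) + \log p(\theta) - \log p(\mathcal{D}_A) \right] - \log p(\mathcal{D}_B) \\
&= \log p(\mathcal{D}_B \mid \theta) + \log p(\theta \mid \mathcal{D}_A) - \log p(\mathcal{D}_B).
\end{aligned}$$

Since $\log p(\mathcal{D}_B)$ does not depend on $\theta$, maximizing the log posterior probability of $\theta$ is equivalent to maximizing

$$\log p(\mathcal{D}_B \mid \theta) + \log p(\theta \mid \mathcal{D}_A).$$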

Assume that we have already trained our model in some way to get to $\theta_A^*$, which does well on task A. We recognize the first term, $\log p(\mathcal{D}_B \mid \theta)$, as the log-likelihood function for task B’s training data: maximizing just this term alone corresponds to the usual maximum likelihood estimation (MLE). For the second term, $\log p(\theta \mid \mathcal{D}_A)$, we can approximate the posterior distribution of $\theta$ based on task A’s data as a Gaussian distribution centered around $\theta_A^*$ with some covariance. (This is known as the Laplace approximation.) Simplifying even further: instead of the distribution having precision matrix equal to the full Fisher information matrix $F$, we assume that the precision matrix is diagonal, with the same values as the diagonal of $F$.
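To make the diagonal of the Fisher information matrix more tangible, here is a minimal sketch in plain Python for a toy logistic-regression model. This is not the kind of network used in Reference 1; the model, the function name `diagonal_fisher`, and the sample values are all assumptions made for illustration. Each diagonal entry is the expected squared derivative of the log-likelihood with respect to one weight, with the expectation over labels taken under the model itself and then averaged over task A's inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def diagonal_fisher(theta, inputs):
    """Diagonal Fisher information of a logistic-regression model.

    Hypothetical helper: theta is a list of weights, inputs is a list of
    feature vectors from task A. The result is averaged over inputs; any
    overall scale can be absorbed into the penalty strength.
    """
    fisher = [0.0] * len(theta)
    for x in inputs:
        p = sigmoid(sum(t * xi for t, xi in zip(theta, x)))
        # For a label y in {0, 1}: d/d theta_i log p(y | x, theta) = (y - p) * x_i.
        # Taking the expectation over y ~ Bernoulli(p) gives E[(y - p)^2] = p * (1 - p).
        for i, xi in enumerate(x):
            fisher[i] += p * (1.0 - p) * xi * xi
    return [f / len(inputs) for f in fisher]


# Toy values, assumed for the example only.
theta_a_star = [2.0, 0.0]
task_a_inputs = [[1.0, 0.1], [-1.0, 0.2], [0.5, -0.1]]
fisher_diag = diagonal_fisher(theta_a_star, task_a_inputs)
print(fisher_diag)
```

In this toy data the first feature has a much larger magnitude than the second, so the first weight receives the larger Fisher value: the model's predictions on task A are more sensitive to it.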

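Putting it all together: under the diagonal Gaussian approximation, $\log p(\theta \mid \mathcal{D}_A)$ equals $-\frac{1}{2} \sum_i F_i (\theta_i - \theta_{A,i}^*)^2$ up to an additive constant, where $F_i$ is the $i$th diagonal entry of $F$. Maximizing $\log p(\mathcal{D}_B \mid \theta) + \log p(\theta \mid \mathcal{D}_A)$ then corresponds to minimizing

$$\mathcal{L}(\theta) = \mathcal{L}_B(\theta) + \sum_i \frac{\lambda}{2} F_i \left( \theta_i - \theta_{A,i}^* \right)^2,$$

where $\mathcal{L}_B(\theta)$ is the loss for task B only, $i$ indexes the weights and $\lambda$ is an overall hyperparameter that balances the importance of the two tasks. EWC minimizes $\mathcal{L}(\theta)$.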
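The EWC objective (task B loss plus a Fisher-weighted quadratic penalty) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names `ewc_penalty` and `ewc_loss`, the stand-in `toy_task_b_loss`, and all numeric values are hypothetical and do not come from Reference 1.

```python
def ewc_penalty(theta, theta_a_star, fisher_diag, lam):
    """Quadratic penalty: sum_i (lam / 2) * F_i * (theta_i - theta_A_i)^2."""
    return sum(
        0.5 * lam * f * (t - t_a) ** 2
        for t, t_a, f in zip(theta, theta_a_star, fisher_diag)
    )


def ewc_loss(task_b_loss, theta, theta_a_star, fisher_diag, lam):
    """Task B loss plus the penalty that anchors theta to theta_a_star."""
    return task_b_loss(theta) + ewc_penalty(theta, theta_a_star, fisher_diag, lam)


def toy_task_b_loss(theta):
    # Hypothetical stand-in for the task B loss: squared distance to a target.
    target = [0.0, 0.0]
    return 0.5 * sum((t - b) ** 2 for t, b in zip(theta, target))


theta_a_star = [1.0, 1.0]   # assumed task A solution
fisher_diag = [10.0, 0.1]   # assumed diagonal Fisher values
lam = 1.0

# At theta_a_star the penalty vanishes, so the objective equals the task B loss.
print(ewc_loss(toy_task_b_loss, theta_a_star, theta_a_star, fisher_diag, lam))
# Moving the high-Fisher weight is penalized far more than moving the low-Fisher one.
print(ewc_penalty([0.0, 1.0], theta_a_star, fisher_diag, lam))
print(ewc_penalty([1.0, 0.0], theta_a_star, fisher_diag, lam))
```

Setting `lam` to zero recovers plain training on task B, while a very large `lam` effectively freezes the weights that mattered for task A.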

Why the name “elastic weight consolidation”? From the authors:

“This constraint is implemented as a quadratic penalty, and can therefore be imagined as a spring anchoring the parameters to the previous solution, hence the name elastic. Importantly, the stiffness of this spring should not be the same for all parameters; rather, it should be greater for those parameters that matter most to the performance during task A.”
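The spring picture can be checked numerically with a small sketch in plain Python. It assumes a toy quadratic task B loss, $\frac{1}{2} \sum_i (\theta_i - b_i)^2$, and runs ordinary gradient descent on that loss plus the quadratic penalty; the function names, the target `target_b`, and the Fisher values are hypothetical choices for illustration. One weight is given a stiff spring (large Fisher value) and the other no spring at all.

```python
def ewc_gradient(theta, target_b, theta_a_star, fisher_diag, lam):
    """Gradient of 0.5 * sum (theta_i - b_i)^2 + sum (lam / 2) * F_i * (theta_i - a_i)^2."""
    return [
        (t - b) + lam * f * (t - t_a)
        for t, b, t_a, f in zip(theta, target_b, theta_a_star, fisher_diag)
    ]


def train_on_task_b(theta_a_star, target_b, fisher_diag, lam, lr=0.05, steps=2000):
    """Plain gradient descent on the penalized objective, starting from the task A solution."""
    theta = list(theta_a_star)
    for _ in range(steps):
        grad = ewc_gradient(theta, target_b, theta_a_star, fisher_diag, lam)
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


theta_a_star = [1.0, 1.0]   # assumed task A solution
target_b = [0.0, 0.0]       # assumed optimum of the toy task B loss
fisher_diag = [10.0, 0.0]   # stiff spring on weight 0, no spring on weight 1

theta_b = train_on_task_b(theta_a_star, target_b, fisher_diag, lam=1.0)
print(theta_b)
```

For this toy objective each weight settles at $(b_i + \lambda F_i \theta_{A,i}^*) / (1 + \lambda F_i)$, so the stiff weight ends up near $10/11$, close to its task A value, while the unconstrained weight moves all the way to the task B optimum at 0.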

References:

- Kirkpatrick, J., et al. (2016). Overcoming catastrophic forgetting in neural networks.