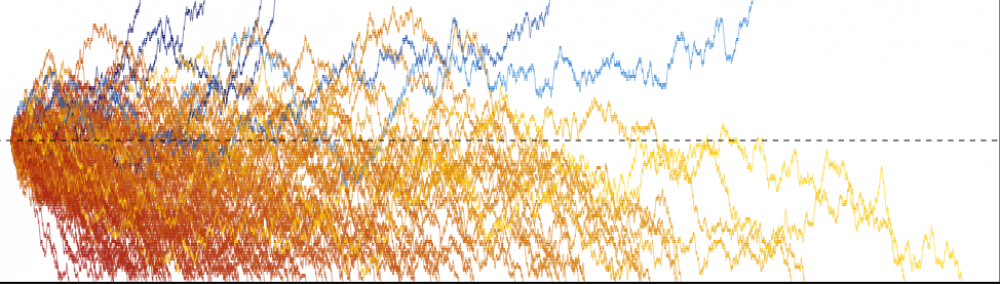

I’ve been reading through Kohavi et al.’s *Trustworthy Online Controlled Experiments*, which gives a ton of great advice on how to do A/B testing well. This post is about one nugget regarding the $t$-test and outliers that I did not know before.

(This appears in Chapter 19 of the book, “The A/A Test”. There, the authors mention this issue in the context of computing the $p$-values for several two-sample $t$-tests. In this post I use a single one-sample $t$-test because it is easier to illustrate the point with fewer sources of randomness.)

Imagine we are doing a one-sample $t$-test with 100 observations. Say our observations are generated in the following way:

```r
set.seed(1)
x <- runif(100, min = 0, max = 1) + 10  # 100 values between 10 and 11
x[100] <- 100000                        # replace the last one with a huge outlier
```

Every single value in x is much higher than zero. We have this one huge outlier, but it’s in the “right” direction (i.e. greater than all the other values in x, and much greater than zero). If we run a two-sided $t$-test with this data for the null hypothesis that the mean of the data-generating distribution is equal to zero, we should get a really small $p$-value, right?

Wrong! Here is the R output:

```r
t.test(x)$p.value
# [1] 0.3147106
```

The culprit is the outlier in x. Sure, it increases the mean of x by a lot, but it also increases the variance a ton! Remember that the $t$-statistic is the ratio of the mean estimate to its estimated standard error (the sample standard deviation divided by $\sqrt{n}$), so the outlier’s effects on the mean and the variance “cancel” each other out.
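To make that ratio concrete, here is a minimal sketch that recomputes the one-sample $t$-statistic by hand for the x above (regenerated so the block runs on its own) and checks it against `t.test()`. The helper name `manual_t` is hypothetical and only used for illustration; the $p$-value uses the $t$ distribution with $n - 1$ degrees of freedom, which is what a one-sample `t.test()` uses.

```r
# Regenerate the data from the post so this block runs on its own
set.seed(1)
x <- runif(100, min = 0, max = 1) + 10
x[100] <- 100000

# Hypothetical helper: one-sample t-statistic for H0: mean = mu
manual_t <- function(v, mu = 0) {
  se <- sd(v) / sqrt(length(v))  # estimated standard error of the mean
  (mean(v) - mu) / se            # mean estimate divided by its standard error
}

t_manual <- manual_t(x)
# Two-sided p-value from the t distribution with n - 1 degrees of freedom
p_manual <- 2 * pt(-abs(t_manual), df = length(x) - 1)

# Compare against what t.test() reports
res <- t.test(x)
print(c(t_manual = t_manual, t_builtin = unname(res$statistic),
        p_manual = p_manual, p_builtin = res$p.value))
```

Both the numerator and the denominator are inflated by the single outlier, which is why the ratio ends up close to 1.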

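Here’s a sketch of the mathematical argument. Assume the outlier has value $M$, and that there are $n$ observations in the sample. When the outlier is very large, it dominates the mean estimate, so the numerator of the $t$-statistic is something like

$$\bar{x} \approx \frac{M}{n}.$$

It also dominates the variance estimate:

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2 \approx \frac{1}{n-1}\left[\left(M - \frac{M}{n}\right)^2 + (n-1)\left(\frac{M}{n}\right)^2\right] = \frac{M^2}{n}.$$

Hence, the $t$-statistic is roughly

$$t = \frac{\bar{x}}{s/\sqrt{n}} \approx \frac{M/n}{|M|/n} = \pm 1,$$

depending on whether $M$ is positive or negative. So the two-sided $p$-value is approximately $2\Phi(-1)$, where $\Phi$ is the standard normal CDF: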

```r
2 * pnorm(-1)
# [1] 0.3173105
```
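The argument predicts that once the outlier is large enough, the $t$-statistic settles near $+1$ or $-1$ no matter how large it gets. One way to check this numerically is to keep the first 99 observations of x and swap in outliers of increasing size and either sign. The grid `M_values` is an arbitrary choice for illustration, and `2 * pt(-1, df = 99)` is included as the same limit under the $t$ distribution rather than the normal approximation.

```r
# The 99 well-behaved observations from the post
set.seed(1)
x <- runif(100, min = 0, max = 1) + 10
base <- x[1:99]

# Arbitrary grid of outlier values M, both positive and negative
M_values <- c(1e2, 1e3, 1e4, 1e5, 1e7, -1e5, -1e7)

# For each M, append it as the 100th observation and run a one-sample t-test
results <- t(sapply(M_values, function(M) {
  res <- t.test(c(base, M))
  c(M = M, t = unname(res$statistic), p = res$p.value)
}))
print(results)

# Limits suggested by the sketch: t -> sign(M), two-sided p -> about 0.32
2 * pnorm(-1)         # normal approximation, as in the post
2 * pt(-1, df = 99)   # same limit under the t distribution with n - 1 = 99 df
```

For small M the outlier does not yet dominate, so the rows at the top of the table are where the approximation should be least accurate.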

Another way to understand this intuitively is to make a plot of the data. First, let’s plot the data without the outlier:

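```r
# Plot the first 99 observations, i.e. everything except the outlier
plot(x[1:99], pch = 16, cex = 0.5, ylim = c(0, max(x[1:99])),
     xlab = "Observation index", ylab = "Value",
     main = "Plot of values without outlier")
abline(h = 0, col = "red", lty = 2)  # dashed red reference line at zero
```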

It’s obvious here that the variation in x pales in comparison to the distance of x from the dashed line at zero, so we expect a very small $p$-value. (In fact, R reports a $p$-value that is astronomically small!) Next, let’s plot the data with the outlier:

```r
# Plot all 100 observations, including the outlier
plot(x, pch = 16, cex = 0.5, ylim = c(0, max(x)),
     xlab = "Observation index", ylab = "Value",
     main = "Plot of values with outlier")
abline(h = 0, col = "red", lty = 2)  # dashed red reference line at zero
```

Not so obvious that the mean of x is different from zero now, right?
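If you prefer numbers to pictures, here is a short sketch that summarizes what the two plots show: the sample mean, the sample standard deviation and the $t$-statistic, computed with and without the outlier. The helper `summarize_sample` is a hypothetical name used only here.

```r
# Regenerate the data from the post so this block runs on its own
set.seed(1)
x <- runif(100, min = 0, max = 1) + 10
x[100] <- 100000

# Hypothetical helper: mean, standard deviation and one-sample t-statistic
summarize_sample <- function(v) {
  c(mean = mean(v), sd = sd(v), t = unname(t.test(v)$statistic))
}

# The outlier is the last observation, so x[1:99] drops it
comparison <- rbind(without_outlier = summarize_sample(x[1:99]),
                    with_outlier    = summarize_sample(x))
print(comparison)
```

Without the outlier the mean sits dozens of standard deviations above zero; with it, the standard deviation is larger than the mean itself, which is exactly what the second plot looks like.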