The logit function is defined as

$$\text{logit}(p) = \log \left( \frac{p}{1 - p} \right),$$

where $p \in (0, 1)$. The logit function transforms a variable constrained to the unit interval (usually a probability) to one that can take on any real value. In statistics, most people encounter the logit function in logistic regression, where we assume that the probability of a binary response $y$ being 1 is associated with features $x_1, \dots, x_k$ through the relationship

$$\text{logit}\left[ P(y = 1) \right] = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k.$$

Because the logit can take on any real value, it makes more sense to model the logit of a probability as above instead of modeling the probability directly like

$$P(y = 1) = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k.$$

If we model the probability directly, the linear combination $\beta_0 + \beta_1 x_1 + \dots + \beta_k x_k$ can result in a value that lies outside the unit interval; in that situation we would have to do some post-processing (e.g. thresholding) to get valid probabilities.
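To make the point about the unit interval concrete, here is a minimal sketch in plain Python. The coefficients and feature values are hypothetical, chosen only for illustration; they do not come from any fitted model.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability p in the open interval (0, 1)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


# Hypothetical coefficients and features (illustration only).
beta = [0.5, 1.2, -0.7]  # intercept, then one coefficient per feature
x = [2.0, 0.5]           # two feature values

# Linear combination: intercept + sum of coefficient * feature.
linear_predictor = beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))

print(logit(0.5))         # log-odds of an even chance
print(linear_predictor)   # not a valid probability if read directly
```

With these made-up numbers the linear combination is larger than 1, so it cannot be read as a probability, but it is a perfectly valid value for a logit.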

The expit function is simply the inverse of the logit function. It is defined as

$$\text{expit}(x) = \frac{e^x}{1 + e^x}.$$

It takes any real number $x$ and transforms it to a value in $(0, 1)$.
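The inverse relationship between the two functions can be checked numerically. The sketch below is one possible implementation, not a reference one: it branches on the sign of the input so that `math.exp` is never called on a large positive argument, which is a design choice added here rather than part of the definition.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))


def expit(x: float) -> float:
    """Inverse of logit, e^x / (1 + e^x), arranged to avoid overflow."""
    if x >= 0:
        # Equivalent form 1 / (1 + e^(-x)); e^(-x) cannot overflow here.
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z lies in [0, 1)
    return z / (1.0 + z)


# Round trips in both directions recover the starting value
# (up to floating-point error).
print(expit(logit(0.3)))
print(logit(expit(2.0)))
```

In practice, SciPy provides both functions as `scipy.special.logit` and `scipy.special.expit`, which also work elementwise on arrays.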

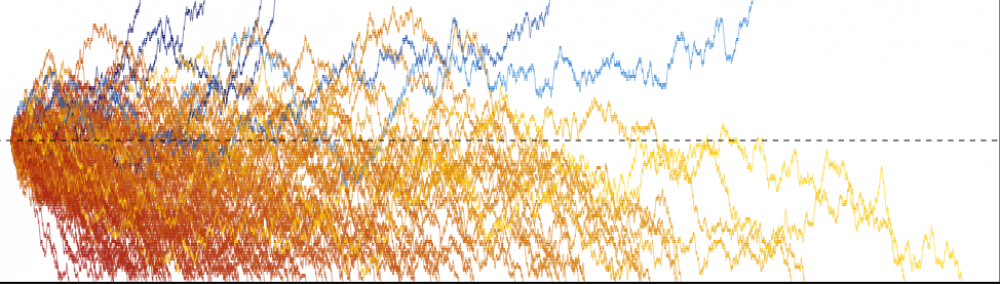

The picture below shows what the logit and expit functions look like. (Since they are inverses of each other, their graphs are reflections of each other across the line.)

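We can think of the expit function as a special case of the softmax function. If we define the softmax function for $n$ variables as

$$\sigma(x_1, \dots, x_n) = \left( \frac{e^{x_1}}{\sum_{i=1}^n e^{x_i}}, \dots, \frac{e^{x_n}}{\sum_{i=1}^n e^{x_i}} \right),$$

then when $n = 2$,

$$\sigma(x, 0) = \left( \frac{e^x}{e^x + 1}, \frac{1}{e^x + 1} \right) = \left( \text{expit}(x), 1 - \text{expit}(x) \right).$$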
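The link between expit and a two-variable softmax whose second input is fixed at 0 can be verified with a short script. This is a sketch under that assumption; the helper names `softmax` and `expit` are defined here for illustration, and subtracting the maximum before exponentiating is a common stability trick rather than part of the definition.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Softmax of a list of real numbers; the outputs sum to 1."""
    m = max(xs)  # shift by the max so exp() cannot overflow
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def expit(x: float) -> float:
    """e^x / (1 + e^x), written as 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


x = 1.7  # arbitrary test value
first, second = softmax([x, 0.0])

# The first component matches expit(x); the second is its complement.
print(first, expit(x))
print(second, 1.0 - expit(x))
```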